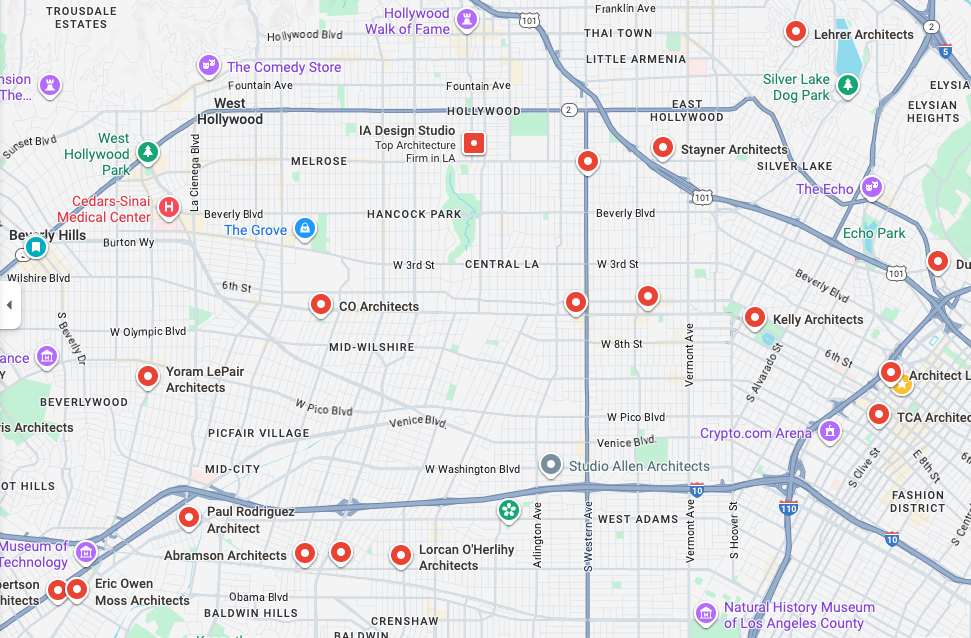

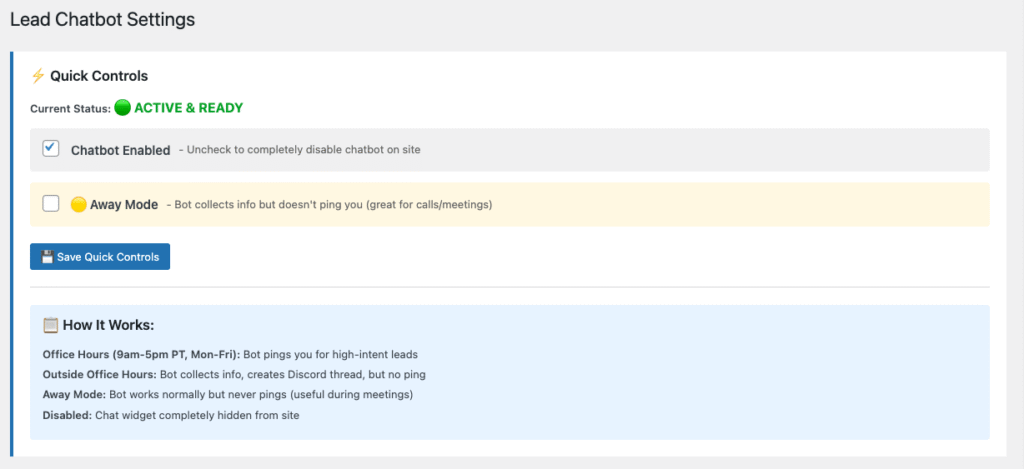

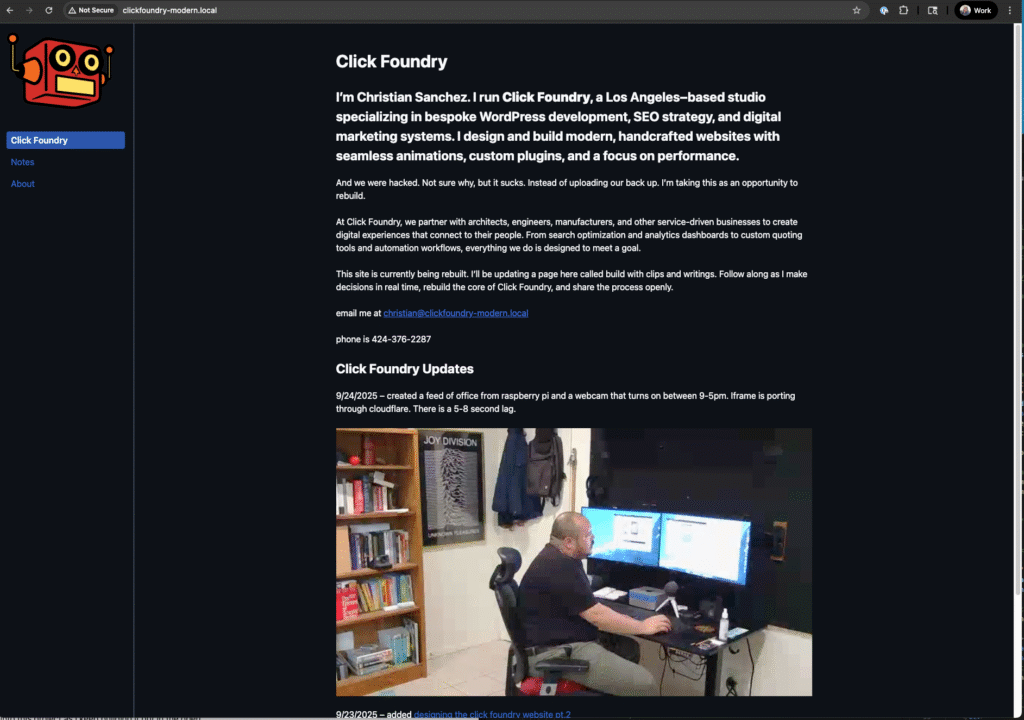

Happy 2026! Search engine optimization (SEO) matters for architects, particularly because larger organizations often capture the organic search results, even at the local level.

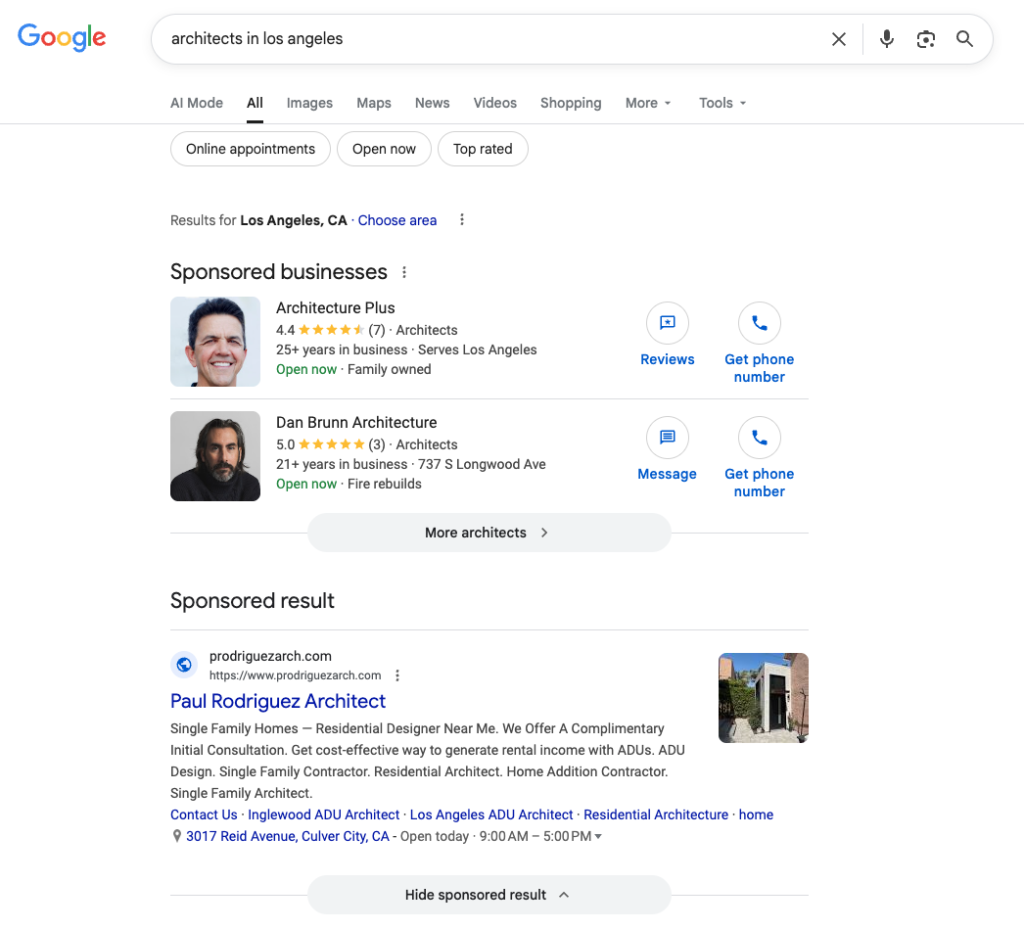

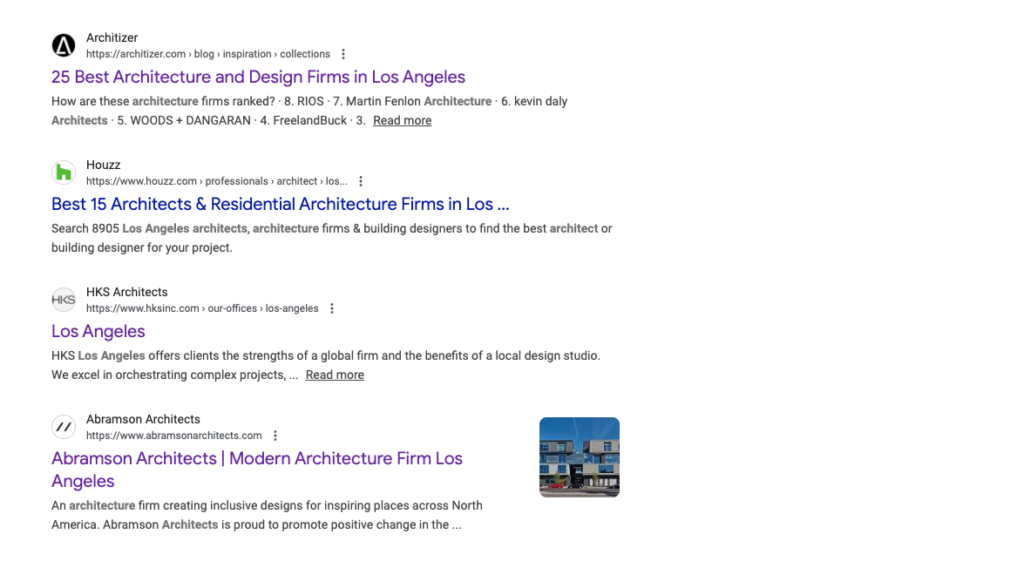

Organic results, in general, are buried. When I search for architects in Los Angeles, sponsored businesses sit at the very top of my results, followed by more sponsored results.

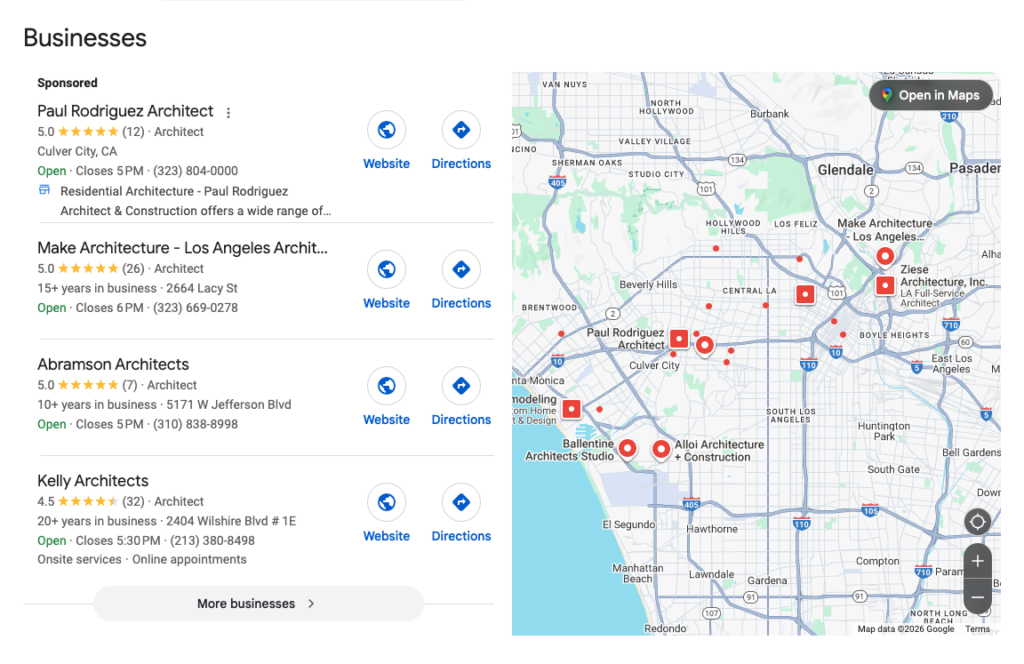

After that, I get my first taste of organic results: the Google Business listings.

Then come the real listings. The top three results for me, writing from Harbor City, CA right now, are Houzz, Architizer and HKS Architects. This piece took me a couple of weeks to put together, and during my initial research the third result was Abramson Architects, followed by Lehrer and then AIA Los Angeles.

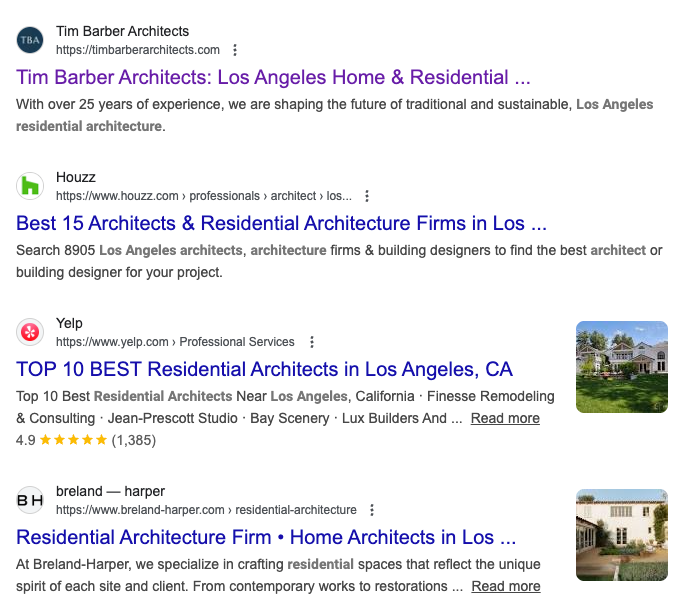

But, and it’s a big one, if I look up “residential architects in Los Angeles,” we get something incredible. Sure, there are still sponsored ads and business listings, but at the top of the search results you get Tim Barber Architects. This space is competitive and constantly shifting, but Tim Barber Architects is always at the top.

Holding the second and third spots in Los Angeles, outside of Houzz, Yelp or some other major player (a well-financed media, tech or real estate entity), are SPF: Architects and Breland.

We’re going to talk about why Tim Barber Architects consistently ranks #1 in search.

We’re also going to explore how all of these sites earned their top spots and what you can do to carve out your own space in search in 2026. Even though organic results in 2026 are buried under a lengthy scroll of ads and search features (Google Business listings, product recommendations, AI summaries and things like that), organic search is still the engine that drives web traffic. AI chat companies use Google search results as part of their summaries, and good search placement can drive down the cost of your Google ads.

Search in architecture is dominated by fairly monied entities like Houzz, Architizer and Yelp, but by comparing Tim Barber’s SEO profile with those of Houzz, Architizer and other top-ranking competitors, we can reveal effective strategies and reverse-engineer what actually works.

SEO for the Big Guys in Architecture

When it comes to search, the big guys in architecture are obviously the most talented architects. Just kidding: it’s the aggregators.

So, why does Houzz rank so well on Google?

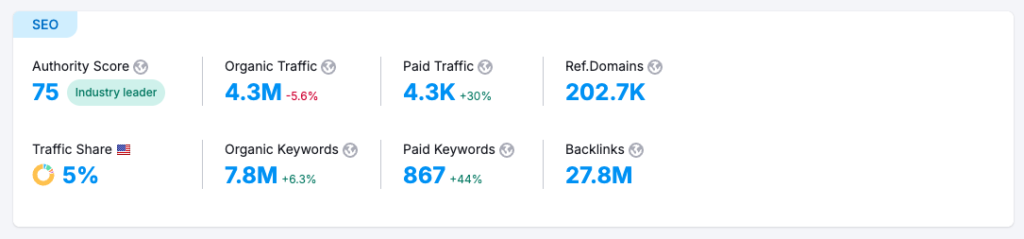

I’m using Semrush to analyze website data. Houzz has an Authority Score of 75, 27.3M backlinks and around 4.3M visits per month.

Its top keywords are very user-oriented, like “home decor ideas” and “laundry room ideas,” in essence competing with Pinterest. In digital marketing terms, this is the top of the funnel.

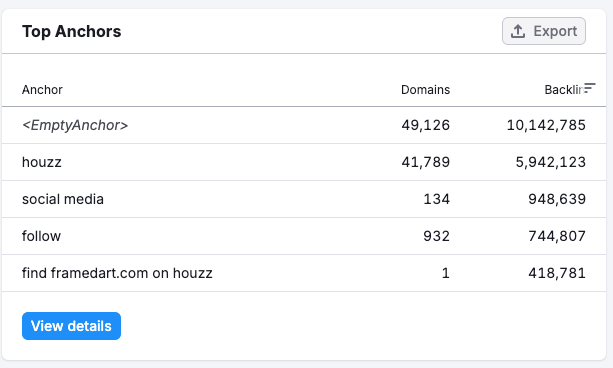

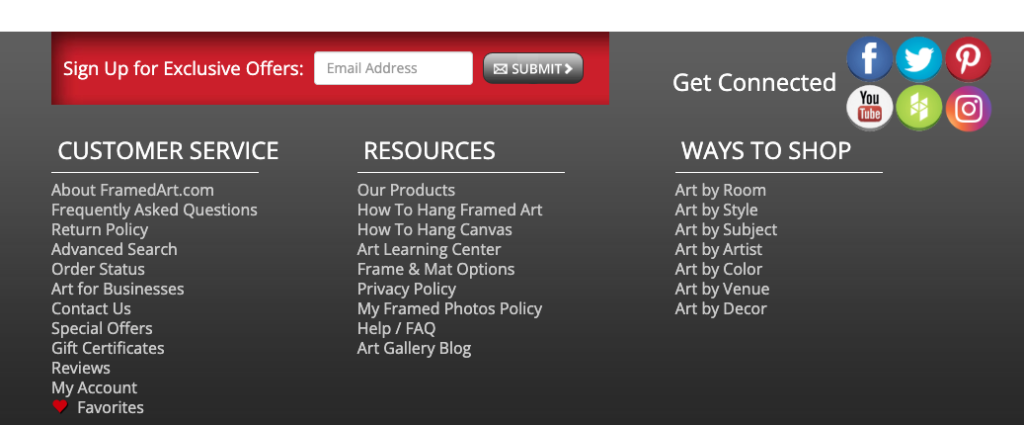

The top anchor data is instructive. The anchor “find framedart.com on Houzz” means a single website accounts for almost half a million backlinks to Houzz. Here’s what that site looks like.

It’s one massive site where the Houzz button is a link repeated on every page. Look at the page source and you’ll find the image’s alt text reads “find framedart.com on Houzz.” Mystery solved.

Another top anchor, <empty anchor>, is also an image link, but with no alt text. Mini-takeaway: alt text and links matter. Only 50K domains link to Houzz with an empty anchor, yet they’ve produced 10M backlinks, about 200 per domain. How?

This is typical when a site adds your logo and link to its footer or header menu: one badge becomes a backlink on every page of that site. It’s a classic “badge” strategy, and it works really well for Houzz. With 27 million backlinks from 200,000 domains, Houzz can create almost any page, do some on-page optimization and rank first pretty quickly.
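To see where an “empty anchor” comes from, here’s a minimal sketch using Python’s built-in `html.parser` that pulls the anchor text out of each link. It assumes a backlink report treats a linked image’s alt text as the anchor text; that’s an assumption about how such tools work, not Semrush’s documented method. The footer markup and the houzz.com URL are hypothetical.

```python
from html.parser import HTMLParser


class AnchorTextParser(HTMLParser):
    """Collects (href, anchor text) for every <a> on a page."""

    def __init__(self):
        super().__init__()
        self.links = []    # (href, anchor text), or "<empty anchor>" when there is none
        self._href = None  # href of the <a> we're currently inside, if any
        self._parts = []   # text and alt-text fragments found inside that <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            self._href = attrs.get("href") or ""
            self._parts = []
        elif tag == "img" and self._href is not None:
            # A linked image contributes its alt text as the anchor text.
            self._parts.append(attrs.get("alt") or "")

    def handle_data(self, data):
        if self._href is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace; if nothing is left, the anchor is empty.
            text = " ".join(" ".join(self._parts).split())
            self.links.append((self._href, text or "<empty anchor>"))
            self._href = None


def anchors(html):
    parser = AnchorTextParser()
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical footer badges: one image with alt text, one without.
footer = (
    '<a href="https://www.houzz.com/"><img src="houzz-badge.png" alt="find framedart.com on Houzz"></a>'
    '<a href="https://www.houzz.com/"><img src="houzz-badge.png"></a>'
)
for href, text in anchors(footer):
    print(href, "->", text)
```

The first badge reports its alt text as the anchor; the second reports `<empty anchor>`. Put either badge in a sitewide footer and every page of that site adds another backlink with the same anchor.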

This is a good time to explain what a link means to an SEO professional. Links are votes of confidence. As an analogy, picture a conversation in which a person (a website or page) says “Spanish Revival architecture is making a comeback” and cites a source (an article in Dwell or Luxe) for why they believe it. That source, the one underpinning the claim, gains authority on the subject, and that kind of citation is the backbone of search results.
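The “votes of confidence” idea has a classic mathematical form: PageRank, the link-analysis method Google’s founders described in the late 1990s. Below is a simplified sketch of that published idea, not Google’s current ranking system, which uses many more signals and isn’t public. Each page splits its score among the pages it links to, so a vote from a well-linked page is worth more than a vote from an obscure one. The page names are made up.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a small baseline score...
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # ...plus an equal share of the score of each page linking to it.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outgoing links spreads its score across all pages.
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank


# Hypothetical mini-web: three blogs cite Dwell, one blog cites Luxe, and Dwell cites Luxe.
web = {
    "blog-a": ["dwell"],
    "blog-b": ["dwell"],
    "blog-c": ["dwell"],
    "blog-d": ["luxe"],
    "dwell": ["luxe"],
}
for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
    print(f"{page:7} {score:.3f}")
```

Luxe finishes ahead of Dwell even though Dwell has more inbound links, because one of Luxe’s two votes comes from Dwell itself. That’s the analogy in numbers: who vouches for you matters as much as how many do.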

Why does Architizer rank so well on Google?

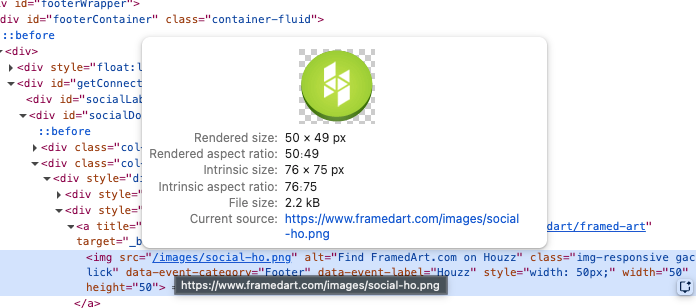

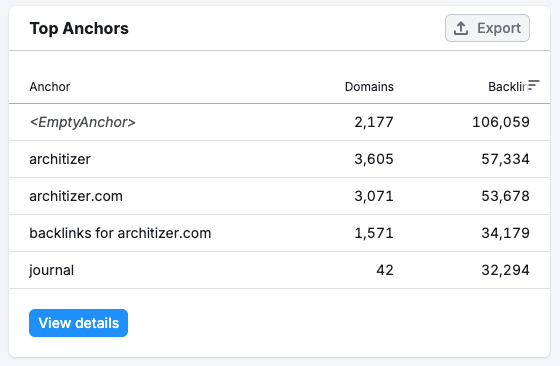

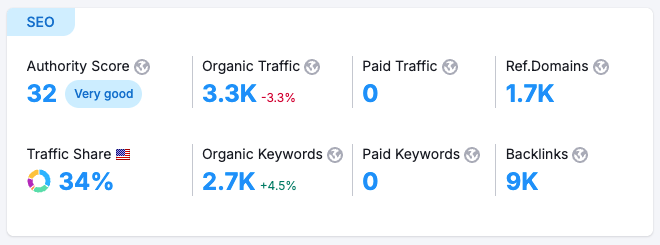

Architizer has an Authority Score of 52, around 215K visits per month and 1M backlinks.

They’re more magazine than aggregator, and they bring in a good deal of traffic with informational articles, such as a listicle on the Jeddah Tower or the Apple headquarters project, which bring in around 20K visitors per page per month (about 20% of traffic). They also publish lists of local architects by region, capturing top spots in highly lucrative local searches, as shown in the original search results for “architects in Los Angeles.”

The anchor situation is similar to Houzz’s: lots of firms add an Architizer or Dezeen badge to their footer with a link.

The real genius of Architizer’s strategy is that the process builds momentum. When they announce a design competition, people submit projects and share them across every channel, including their own websites.

It also gives Architizer something to talk about, and people to talk to, for subsequent articles and marketing campaigns. At the end of the contest, the winners and runners-up add the press mention to their own sites. Architizer runs this cycle at least annually, across multiple categories, with room for special contests.

The prestige also encourages architects to create Architizer profiles and add badges to their sites. Whenever their work is featured, they add another link to Architizer. It’s a very asymmetric relationship, and a fairly under-analyzed one.

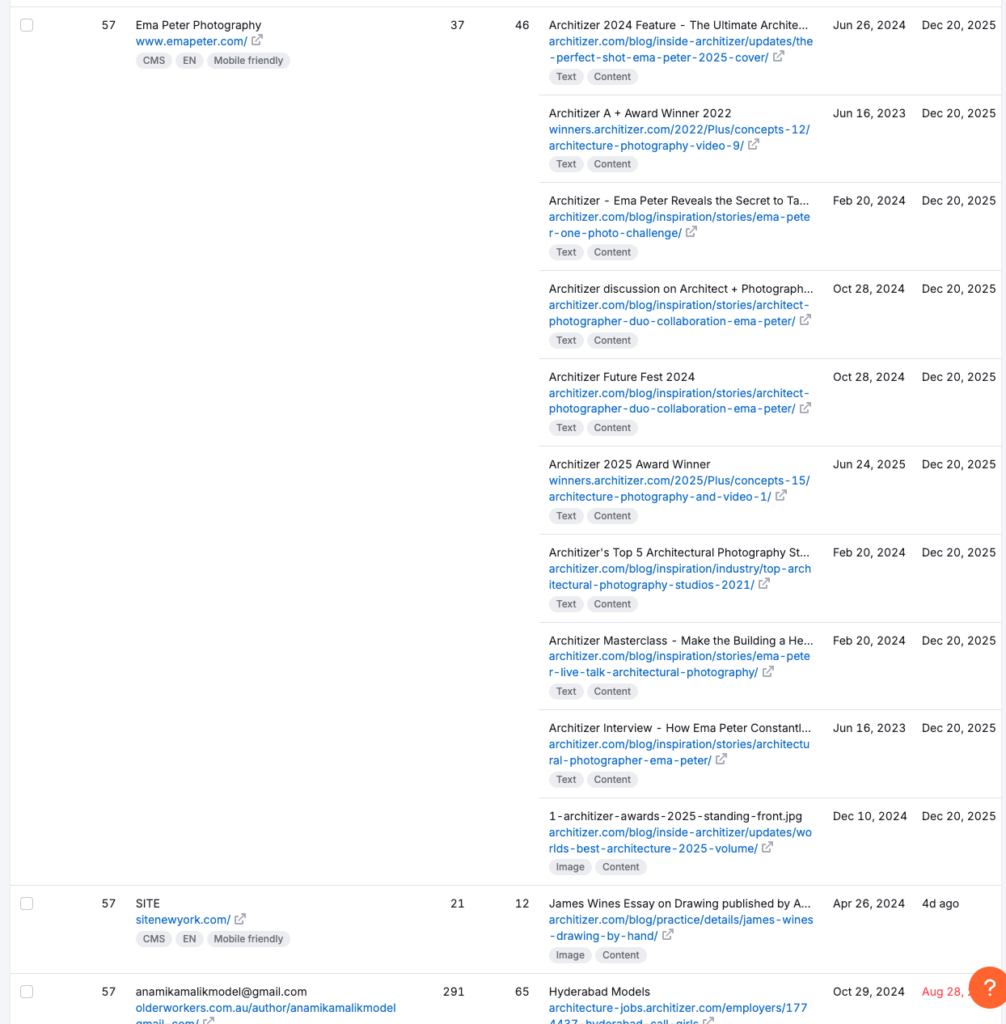

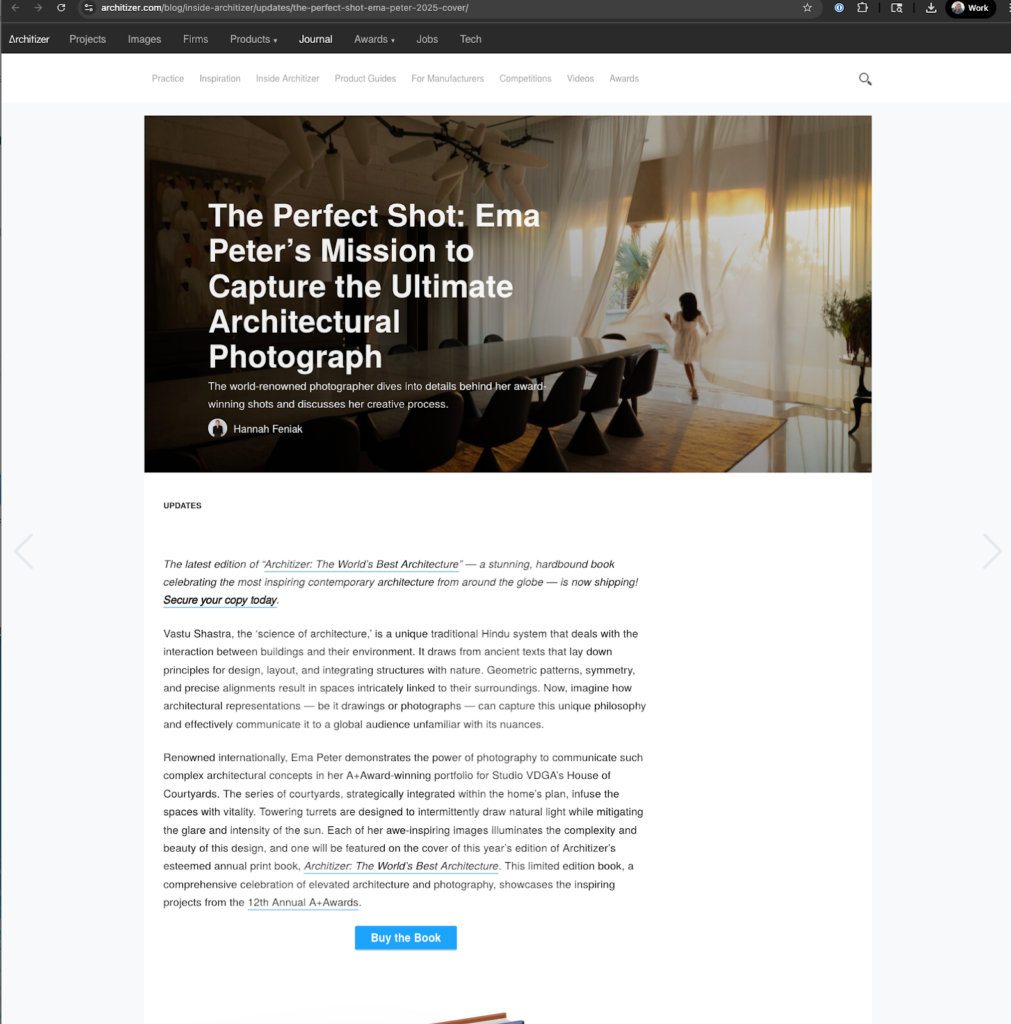

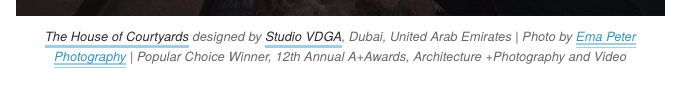

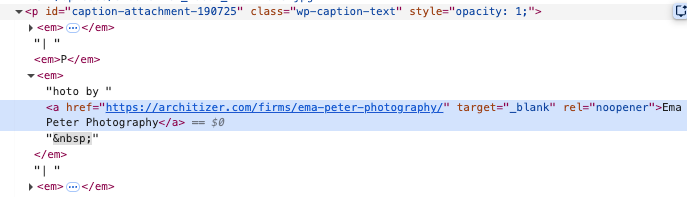

Take Ema Peter Photography: the photographer has provided 10 backlinks to Architizer. Going back to our analogy, this photographer has vouched for Architizer 10 times. The question is, does Architizer vouch for Ema Peter? Sort of…

There’s a link to her. Unfortunately, it leads to her Architizer profile page, which, to their credit, does have a follow link. But in general the link trade is unbalanced, mostly because people aren’t aware of how to write code, particularly the attributes associated with hyperlinks.

DO-FOLLOW VS. NOFOLLOW

Links point to sources their authors trust, and the authority they pass along is what SEO jargon calls “link juice.” Search engines like Google tally up the links, categorize them and do some math to arrange search results. When writing a link in HTML markup, you can add attributes. The one we’re going to talk about is the rel attribute, which describes the relationship between the current page and the linked page.

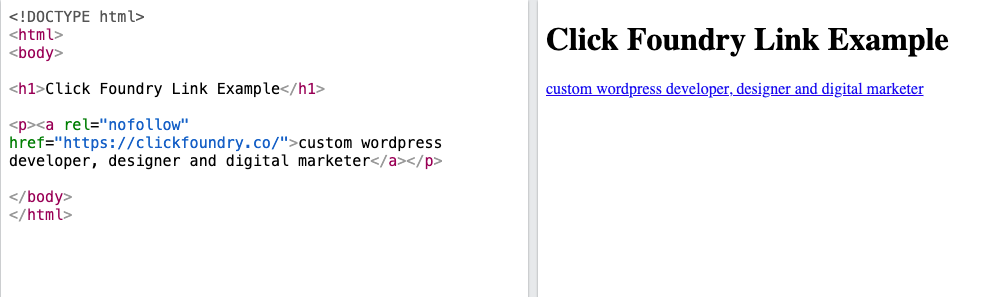

When a link is written like this:

```html
<a rel="nofollow" href="https://clickfoundry.co/">custom wordpress developer, designer and digital marketer</a>
```

That tells the search engine that the link is not endorsed (Google now treats nofollow as a hint rather than a strict rule). This is a matter of link hygiene, and it’s a two-way street. There’s no reason your firm should pass link juice to Architizer or Houzz indefinitely from every page. You might like the logo and think it signals trust; if so, keep it, but put a nofollow attribute on the link. That lets you be more intentional about how you use your links.
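To audit your own pages, here’s a minimal sketch, again using only Python’s standard library, that lists links pointing at domains you choose (say, houzz.com and architizer.com) that still pass endorsement. Besides nofollow, it treats sponsored and ugc as non-endorsing, the two related rel values Google introduced in 2019. The helper name `followed_links_to`, the footer markup and the profile URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# rel values that tell Google a link is not an editorial endorsement.
NON_ENDORSING = {"nofollow", "sponsored", "ugc"}


class LinkCollector(HTMLParser):
    """Records (host, set of rel tokens) for every <a> on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        host = urlparse(attrs.get("href") or "").netloc.lower()
        # rel can hold several space-separated tokens, e.g. "nofollow noopener".
        rel = set((attrs.get("rel") or "").lower().split())
        self.links.append((host, rel))


def followed_links_to(html, domains):
    """Hosts on `domains` (or their subdomains) linked WITHOUT a non-endorsing rel value."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    flagged = []
    for host, rel in collector.links:
        on_target = any(host == d or host.endswith("." + d) for d in domains)
        if on_target and not (rel & NON_ENDORSING):
            flagged.append(host)
    return flagged


# Hypothetical footer: a followed Houzz badge and a nofollowed Architizer link.
footer = (
    '<a href="https://www.houzz.com/pro/example-firm"><img src="houzz.png" alt="Houzz"></a>'
    '<a rel="nofollow noopener" href="https://architizer.com/firms/example-firm/">Architizer</a>'
)
print(followed_links_to(footer, {"houzz.com", "architizer.com"}))
```

Each host it reports is a candidate for the rel="nofollow" treatment shown above. It reads raw HTML only, so links injected by JavaScript won’t appear.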

Looking back at the bigs

The big guys use the lure of prestige or leads to position themselves as industry leaders. They take the trust you have in them and offer users and subscribers a badge. It’s an ongoing and expensive strategy (the messaging, culling through all of those submissions), and it provides the infrastructure that keeps Houzz in first place. And with AI pulling from search results, SEO will only grow in relevance in the coming years.

Community-based organizations

Why does the AIA rank so well on Google?

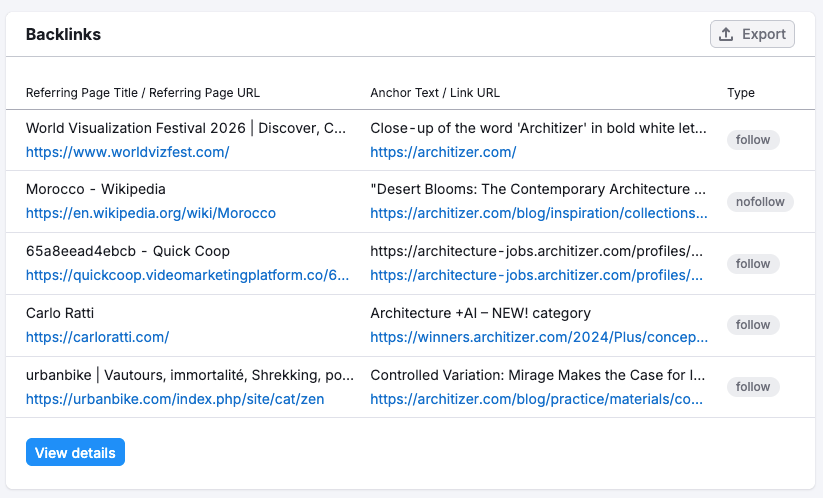

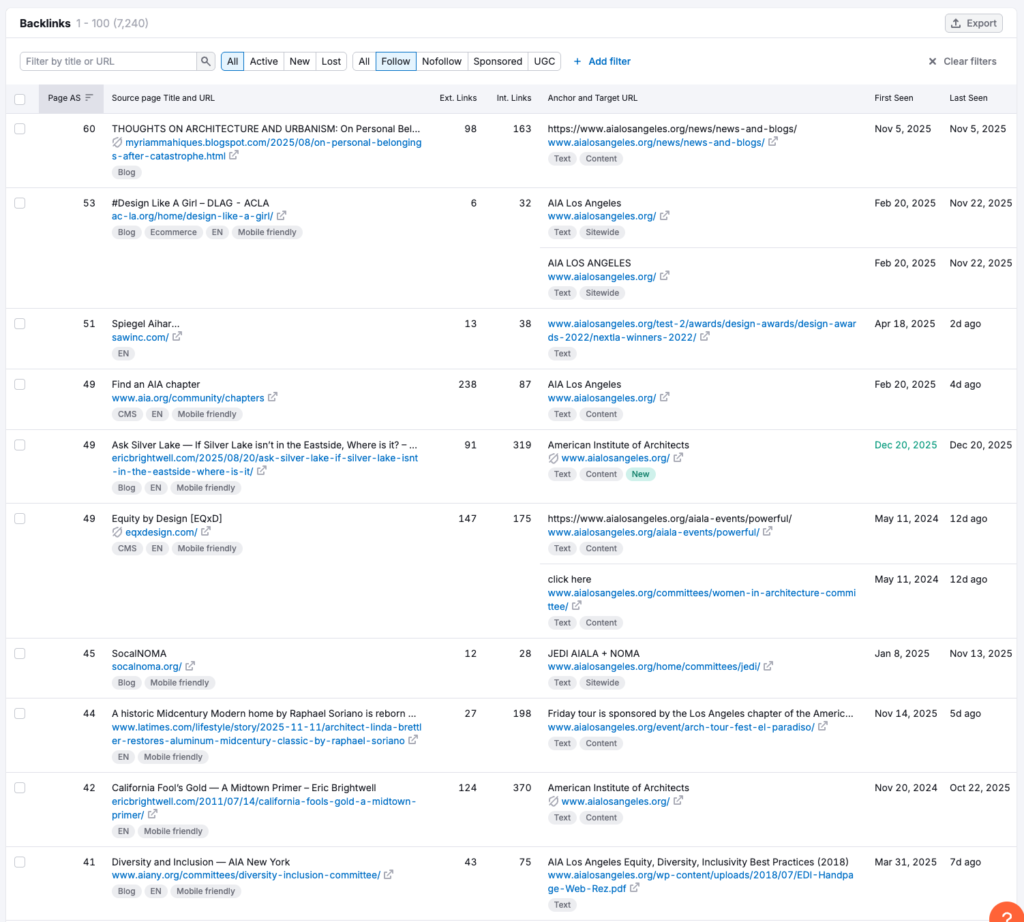

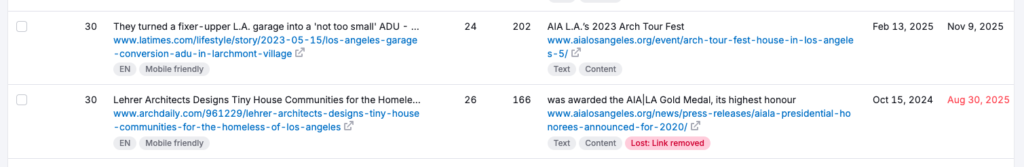

AIA Los Angeles has an Authority Score of 32, with 9K backlinks from 1.7K referring domains. Some blogs cite the AIA simply as an authority on architecture, presumably because of its write-ups on niche subjects. It has also acquired links from other LA-based organizations that have partnered with AIA Los Angeles, like the second backlink listed, for the “Design Like a Girl” initiative. Hundreds of architects also link to their member profiles on the AIA-LA website.

The AIA earns media, perhaps through proactive PR or simply by being the local AIA chapter. The LA Times and The New York Times have cited the Los Angeles chapter as a source several times. Other notable high-value backlinks (from domains with authority) include Wikipedia.

Independents

Why does Abramson rank so well on Google?

We’re about to get practical. Going from 200K websites linking to you (Houzz) to a little under 500, let’s explore what a high-performing firm does to reach the top three search results in a major city.

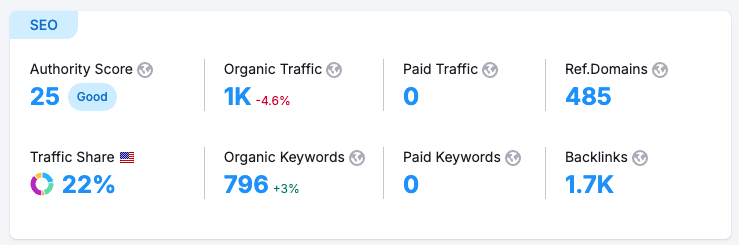

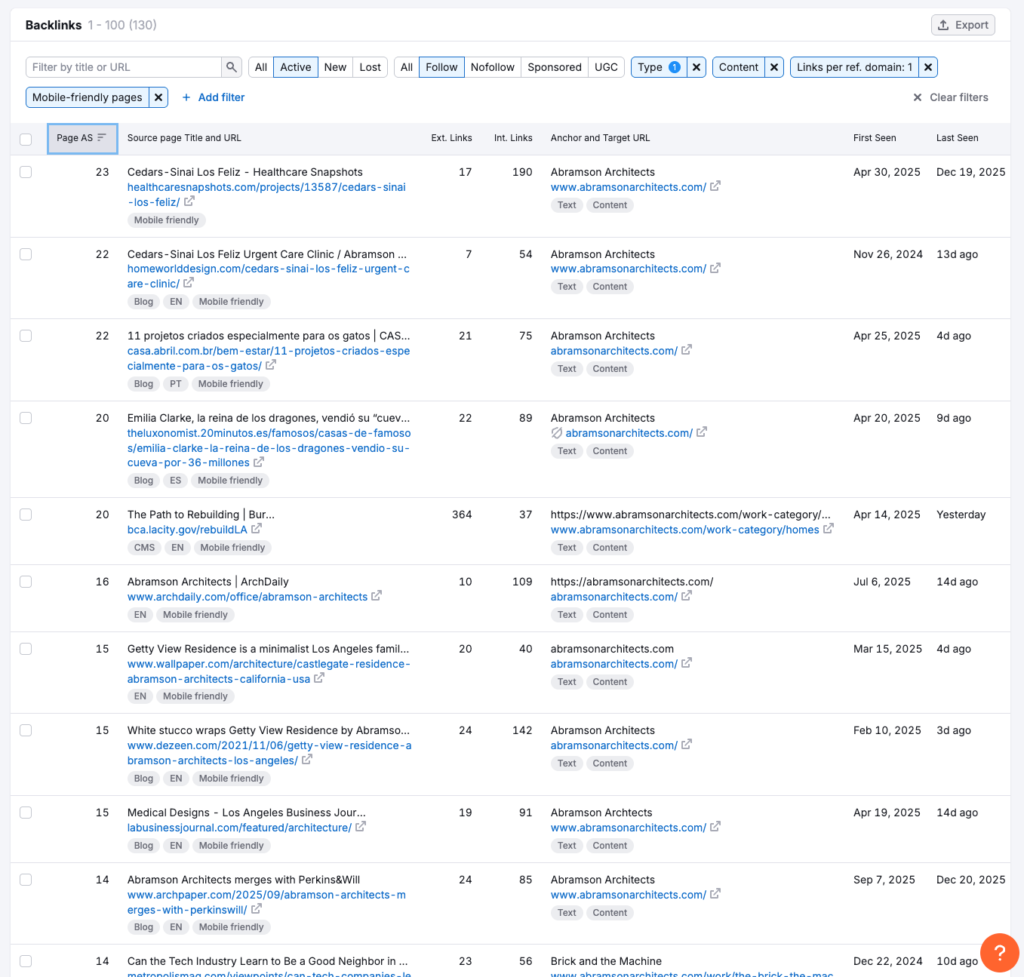

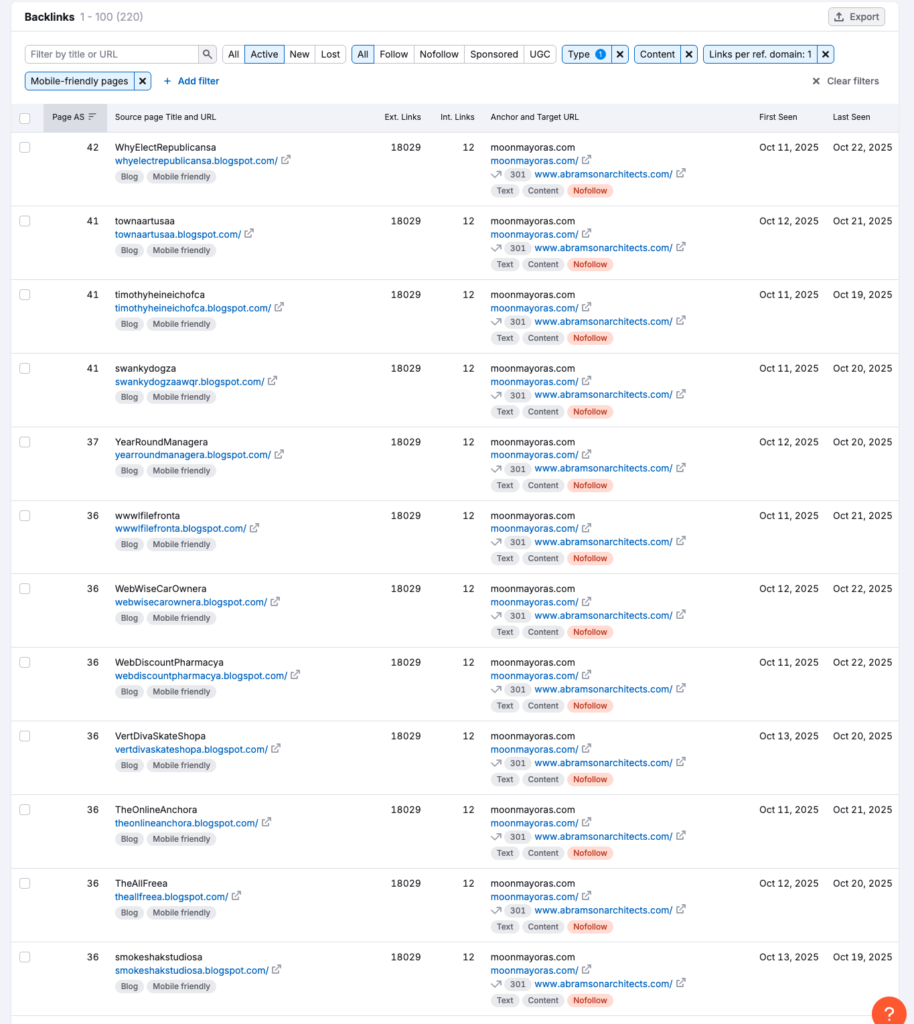

Abramson has an Authority Score of 25. They get about 1,500 monthly visits and have about 450 referring domains and 1,600 backlinks, roughly 400 of them nofollow links, most likely on blogs, social media platforms and aggregators like Yelp, Houzz and Dezeen. In 2025 they gained almost 400 backlinks.

If you look at all of the backlinks above, you can split them into lower-end curatorial sites, established press outlets, professional organizations and spammier backlinks.

Magazines like Wallpaper* have an editorial vision or partnership programs, so you’ll have to submit your work and cross your fingers for traction, whereas a site like HomeworldDesign has a fairly easy contact process.

At the same time, they have links from a lot of Blogspot subdomains, adding up to thousands of backlinks:

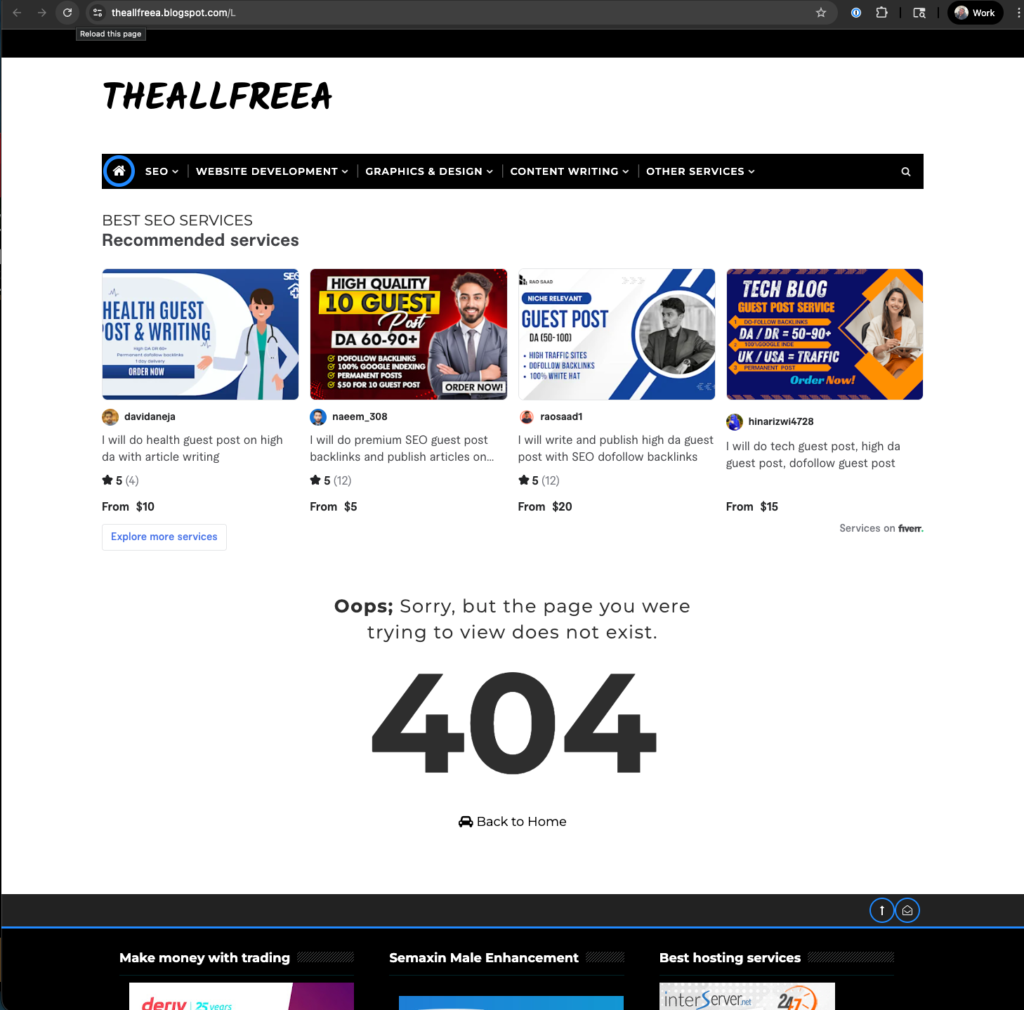

Blogspot subdomains carry a good deal of authority. I noticed that these links were hidden and carried a nofollow rel attribute, which should negate their value. That said, a few SEO specialists doubt the word from on high (Google). If you go to one of these Blogger-style pages, you’ll see this:

A page selling SEO services off Fiverr. These are quite risky: when Google finds these link networks, a site’s authority can go from the high 30s to 0 overnight, crashing your rankings. Some SEO consultants use these networks, and their clients might not know.

How does Tim Barber Architects rank FIRST on Google?

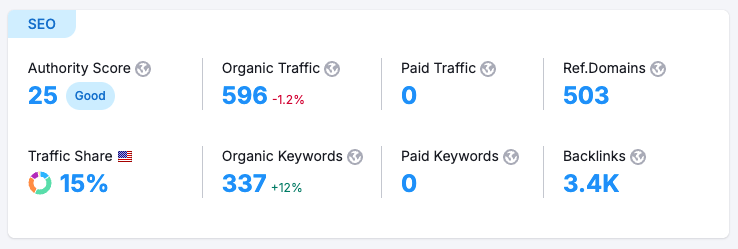

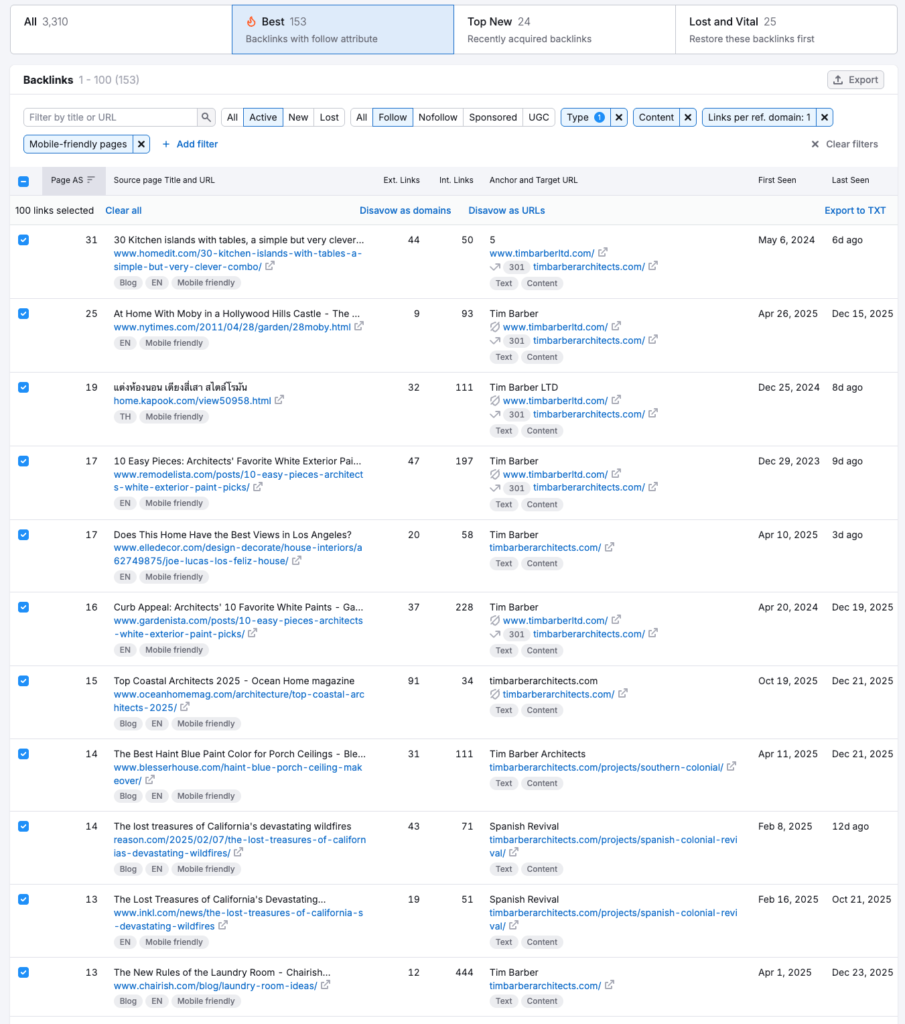

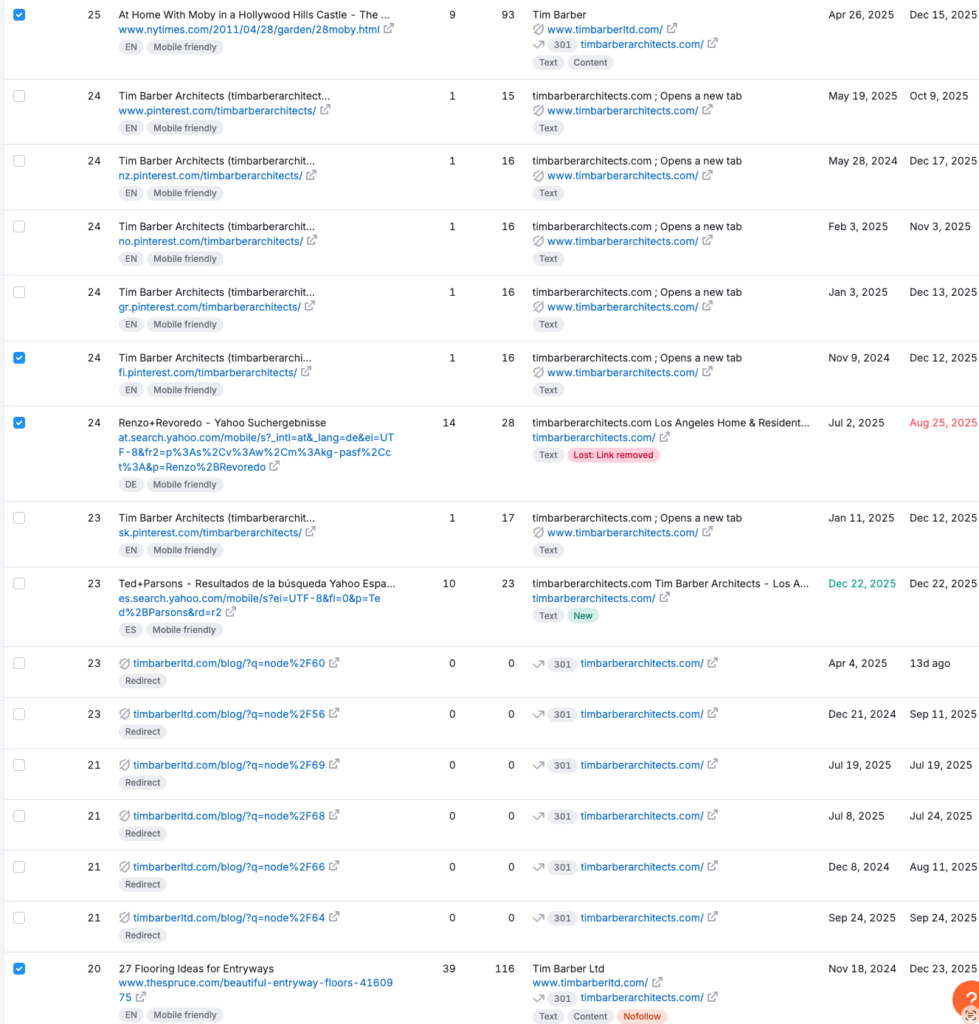

Tim Barber ranks first for “residential architects in Los Angeles,” and besides pretty good on-page SEO for an architect, he has a solid backlink profile. His Authority Score in Semrush is 25, the same as Abramson’s, but he has 3.4K backlinks from 500 referring domains, about 850 of which are nofollow.
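Putting the Semrush figures quoted in this piece side by side makes the comparison concrete. This small sketch only does arithmetic: backlinks per referring domain, and the nofollow share where the article gives a nofollow count. The numbers are snapshots from the screenshots and will drift over time.

```python
# Backlink figures as quoted in this article: (backlinks, referring domains, nofollow).
# Semrush snapshots that change over time; None means no nofollow count was given.
profiles = {
    "Houzz": (27_300_000, 200_000, None),
    "AIA Los Angeles": (9_000, 1_700, None),
    "Abramson": (1_600, 450, 400),
    "Tim Barber": (3_400, 500, 850),
}


def summarize(backlinks, domains, nofollow):
    """Return (backlinks per referring domain, nofollow share or None)."""
    per_domain = backlinks / domains
    share = None if nofollow is None else nofollow / backlinks
    return per_domain, share


for name, figures in profiles.items():
    per_domain, share = summarize(*figures)
    share_text = "n/a" if share is None else f"{share:.0%}"
    print(f"{name:16} {per_domain:7.1f} links/domain   nofollow: {share_text}")
```

Abramson and Barber land on the same 25% nofollow share; the difference in these numbers is volume, with Barber at 6.8 links per referring domain against Abramson’s roughly 3.6. The arithmetic alone doesn’t explain a #1 ranking, which is why the content and PR picture matters.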

Below are some of his best backlinks. There’s plenty of backlink opportunity here: Homedit, for instance, publishes a submission email address as well as submission guidelines. There’s also a New York Times article about high-profile homes.

Someone is helming their SEO strategy, and doing quite a good job. The website has 99 blog entries, with highly searchable titles for his projects, like “Spanish Colonial Revival.” There are also some good PR relationships at work here: Tim Barber is a source for interior design listicles, pieces on the LA vistas from one of his projects and even laundry room trends. That’s what it takes to win LA.

So far, we’ve identified Barber’s strategy as easy project submissions, good press and solid on-page SEO. Like Abramson, they also have spammy nofollow links:

And when you go there, you can’t see the link anywhere, because it’s hidden in the HTML markup (a common, old-school spammy SEO trick).
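Here’s a rough sketch of how you might spot that kind of link yourself. It flags links whose own markup hides them, through an inline `display:none` or `visibility:hidden` style or the `hidden` attribute, on the link itself or on an element wrapping it. It’s deliberately limited: links hidden with CSS classes, off-screen positioning or text colored to match the background won’t be caught, and a thorough check would need to render the page. The sample page and URLs are made up.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they can't wrap a link.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


def hides(attrs):
    """True if an element's own markup hides it (inline style or `hidden` attribute)."""
    style = (attrs.get("style") or "").replace(" ", "").lower()
    return "hidden" in attrs or "display:none" in style or "visibility:hidden" in style


class HiddenLinkFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.stack = []         # (tag, hidden) for each currently open element
        self.hidden_links = []  # hrefs of links inside hidden markup

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # Hidden if the element hides itself or sits inside a hidden parent.
        hidden = hides(attrs) or (self.stack[-1][1] if self.stack else False)
        if tag == "a" and hidden:
            self.hidden_links.append(attrs.get("href") or "")
        if tag not in VOID:
            self.stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Close back to the matching open tag; ignore stray closing tags.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break


def hidden_links(html):
    finder = HiddenLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_links


# Made-up page: one link tucked inside a hidden div, one visible link.
page = (
    '<p>Beautiful kitchen!</p>'
    '<div style="display: none"><a href="https://spam.example/">cheap seo</a></div>'
    '<a href="https://ok.example/">a visible link</a>'
)
print(hidden_links(page))
```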

Despite the aggregator Goliaths, Barber has ranked #1 through savvy PR, project submissions and a solid on-page SEO strategy.

So what’s the takeaway?

You have three paths:

1. PR: Develop relationships with publications and journalists, and send out press releases and media kits.

2. Submit Your Work: Document your best projects, submit to publications with open calls (Homedit, ArchDaily, regional design magazines), enter competitions that generate press. Free, but time-consuming.

3. Leverage your network: This is the overlooked play. As an architect, you already work with craftspeople and specialists who have websites and audiences:

- Your cabinet maker probably has a blog showcasing custom work

- Your marble supplier features projects on their site

- Your interior designer writes about collaborations

- Your structural engineer has case studies

These are natural, contextually relevant backlinks. Reach out and propose: “Hey, our project together turned out great. Want to feature it on your site?” They get content; you get a do-follow link from a relevant domain. It costs nothing, it’s completely legitimate, and it’s exactly the kind of organic relationship Google likes.

With Houzz and other aggregator networks vying for the top spot, SEO is a continuous endeavor. Barber and Abramson built great work, documented it and hustled for coverage, or paid someone to do it for them. There’s no magic shortcut, just good work and consistent outreach, whether you do it yourself or hire it out.